While looking for a suitable machine to virtualize the services I use, I came across the Fujitsu Futro S920, a thin client that is especially popular for pfSense or OPNsense projects because of its expandability and low power consumption.

I then tried using it as a Homelab Server with Proxmox Virtual Environment, ZFS and a cloud backup strategy.

Specifications and upgrades

In its main configuration, the Futro S920 has an AMD GX-415GA processor with 4 cores and 4 threads, supports up to 16 GB of RAM, and has a PCI Express slot that, with the help of a riser, can take an expansion card such as an additional network card.

All for about 40 to 50 euros on eBay.

My unit came with a 16GB mSATA disk, which I replaced with a 128GB one bought on AliExpress for about 12 euros, and 4GB of RAM, which I upgraded to 16GB.

For my homelab server I wanted mirrored SSD storage managed by ZFS. The Futro has only one SATA port, inconveniently mounted under the heatsink, but it also has a mini PCI Express expansion slot, which I used to add a card with two SATA ports like this one.

It is possible to power the two SATA disks from the 4-pin floppy drive connector using a cable with two SATA power connectors such as this one:

The end result is as follows:

NIC 2.5Gb/s

After upgrading my router to take full advantage of my 2.5Gb/s FTTH, I decided to upgrade my Futro’s network card.

I bought this network card based on the Intel I225-V controller and connected it with a PCIe x1 riser from AliExpress. A flexible riser is preferable, otherwise you risk not being able to close the case.

After the first tests, however, I noticed that performance was lower than expected: in speedtests I could only reach 1.7Gb/s, whereas tests run from my router peaked at 2.2Gb/s.

Server: TIM SpA - Ancona (id: 54071)

ISP: Telecom Italia

Idle Latency: 1.73 ms (jitter: 0.39ms, low: 1.69ms, high: 2.46ms)

Download: 1700.13 Mbps (data used: 959.7 MB)

5.49 ms (jitter: 1.05ms, low: 2.08ms, high: 11.39ms)

Upload: 1003.23 Mbps (data used: 700.9 MB)

7.20 ms (jitter: 0.49ms, low: 5.17ms, high: 8.53ms)

Packet Loss: 0.0%

After some research I came across a post on the ServeTheHome forum, from which it appears that the Futro's PCIe slot defaults to Gen1 speed.

You can confirm this by running the lspci -vvv command and checking LnkSta for the network card:

LnkSta: Speed 2.5GT/s (downgraded), Width x1
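To put that LnkSta value in perspective, here is a small sketch of my own (the function names are made up for illustration). It extracts the negotiated speed from lspci output and estimates the per-lane data rate, relying on the fact that PCIe Gen1 and Gen2 use 8b/10b encoding. A Gen1 x1 link works out to about 2000 Mb/s before packet overhead, which would be consistent with the ceiling I was seeing.

```shell
# Print the negotiated speed from the first LnkSta line of `lspci -vvv`
# output read on stdin (for example "2.5GT/s" or "5GT/s").
lnksta_speed() {
    sed -n 's/.*LnkSta:[[:space:]]*Speed \([0-9.]*GT\/s\).*/\1/p' | head -n 1
}

# Approximate per-lane data rate in Mb/s after 8b/10b encoding.
# Only valid for PCIe Gen1 and Gen2. $1 is the transfer rate in MT/s,
# e.g. 2500 for 2.5GT/s or 5000 for 5GT/s.
lane_mbps() {
    echo $(( $1 * 8 / 10 ))
}
```

For example, `lspci -vvv -s 01:00.0 | lnksta_speed` prints the current link speed of the card, where 01:00.0 is a placeholder for the address that plain `lspci` reports for your network card. `lane_mbps 2500` gives the Gen1 figure and `lane_mbps 5000` the Gen2 one.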

Fortunately, it is possible to enable PCIe Gen2 in the BIOS; to do so, you need to run the Editcmos.exe tool from FreeDOS.

FreeDOS is a DOS-compatible operating system that lets us update the BIOS and run Editcmos.exe even without a Windows installation.

We prepare a USB drive by downloading the FullUSB image and writing it with dd (replace /dev/sdb with your own USB device, since dd overwrites the target):

dd if=FD13FULL.img of=/dev/sdb bs=4M
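Since dd overwrites whatever device it is pointed at, a quick guard before running it can help. This is a minimal sketch, not part of my original procedure: the helper name is_mounted is my own, and it simply checks whether the device or one of its partitions shows up in the mounts table.

```shell
# Return success if the device, or any partition whose name starts with it,
# appears as a source in a mounts table.
# $1: device path (e.g. /dev/sdb); $2: mounts file, defaults to /proc/mounts.
is_mounted() {
    awk -v dev="$1" 'index($1, dev) == 1 { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}
```

A possible use is `is_mounted /dev/sdb && echo "device is mounted, unmount it first"` just before the dd command. The match is a simple prefix check, so it errs on the side of caution.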

When finished, we copy the BIOS update from the Fujitsu download center and the Editcmos.exe tool to the root of the FreeDOS drive.

We boot into the DOS environment and, after performing the BIOS update, run this command to enable Gen2 speed on the PCIe slot:

> EditCMOS.exe SetID:0x01B7=0x0151

Let’s go back to Proxmox and check LnkSta again:

LnkSta: Speed 5GT/s, Width x1

After this change, the speedtest reaches maximum performance:

Speedtest by Ookla

Server: Vodafone IT - Milan (id: 4302)

ISP: Telecom Italia

Idle Latency: 11.34 ms (jitter: 0.81ms, low: 10.90ms, high: 12.19ms)

Download: 2156.85 Mbps (data used: 2.4 GB)

37.78 ms (jitter: 33.07ms, low: 9.85ms, high: 335.34ms)

Upload: 1029.52 Mbps (data used: 998.2 MB)

23.81 ms (jitter: 1.74ms, low: 10.82ms, high: 36.42ms)

Packet Loss: 0.0%

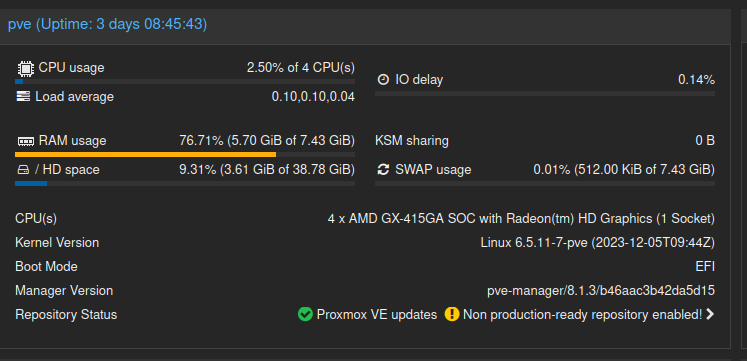

Proxmox Virtual Environment

I chose to use a hypervisor like PVE because I wanted to use LXC containers and because of the support for ZFS.

Once Proxmox is installed, the first step, if you do not want to purchase a support subscription, is to disable the enterprise repositories and enable the no-subscription ones.

The only difference is that the enterprise repositories are more thoroughly tested and therefore better suited to production environments.

To do this, simply go to your node > Updates > Repositories.
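The same change can also be made from the shell. The following is only a sketch under a few assumptions: it targets the classic one-line APT format (newer Proxmox releases may use deb822 .sources files, where it does not apply), the Debian codename is passed in by you, and the helper names are my own.

```shell
# Comment out every active "deb" line in a one-line-format APT list file.
# Runs in a subshell so its temporary variables do not leak.
disable_repo_file() (
    out=$(mktemp) || exit 1
    sed 's/^deb /# deb /' "$1" > "$out" && cat "$out" > "$1"
    status=$?
    rm -f "$out"
    exit "$status"
)

# Print the no-subscription repository line for a given Debian codename.
no_subscription_line() {
    echo "deb http://download.proxmox.com/debian/pve $1 pve-no-subscription"
}
```

On a release based on Debian bookworm, for instance, this could be used as `disable_repo_file /etc/apt/sources.list.d/pve-enterprise.list` followed by `no_subscription_line bookworm > /etc/apt/sources.list.d/pve-no-subscription.list` and `apt update`. The name of the second file is arbitrary.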

Still in the node view, I then created a mirrored ZFS pool under Disks > ZFS. Enabling compression saves disk space at the expense of a slight increase in CPU load.
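For reference, a roughly equivalent pool can be created from the command line. The helper below is a sketch that only prints the command instead of running it, so it can be reviewed first. The pool name, the disk paths and the choice of lz4 and ashift=12 are assumptions of mine, not necessarily what the GUI uses, and unlike the GUI this does not register the pool as a PVE storage.

```shell
# Print (do not run) a zpool create command for a two-disk mirror
# with lz4 compression. $1: pool name, $2 and $3: disk paths.
zpool_mirror_cmd() {
    echo "zpool create -o ashift=12 -O compression=lz4 $1 mirror $2 $3"
}
```

For example, `zpool_mirror_cmd Storage /dev/sdb /dev/sdc` prints the command for a pool named Storage. Using stable /dev/disk/by-id/ paths instead of /dev/sdX names is generally safer.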

My server currently hosts 3 LXC containers (Pi-hole, Home Assistant and Grocy) and performance seems good.

Cloud backup

With PVE set up to store the usual container backups on internal storage (in this case the two ZFS-mirrored disks), I also wanted a copy of the backups in the cloud for disaster recovery.

I used rclone, a client that allows you to synchronize files with more than 70 storage providers including Google Drive, OneDrive and Proton Drive (my case).

Through the PVE shell I installed rclone

curl https://rclone.org/install.sh | bash

and configured my remote

rclone config
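Before relying on the remote in a backup job, it is worth checking that it is actually configured. A minimal sketch (the helper name remote_exists is my own) using `rclone listremotes`, which prints one remote per line followed by a colon:

```shell
# Succeed if the named remote appears in the output of `rclone listremotes`.
# $1: remote name without the trailing colon, e.g. ProtonDrive.
remote_exists() {
    rclone listremotes | grep -Fxq "$1:"
}
```

For example, `remote_exists ProtonDrive && rclone lsd ProtonDrive:` lists the top-level directories of the remote only if it exists. Adding `--dry-run` to a first `rclone sync` is another way to see what would be transferred without changing anything.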

Proxmox can run a hook script during backup jobs, passing the current phase as the first argument; when the whole job has finished, that argument is job-end.

So I prepared this script, which synchronizes the backups folder with my cloud remote once the backups have been created.

#!/bin/sh

write_msg()

{

echo "$(date +"%Y-%m-%d %H:%M:%S") $1"

}

duration()

{

DURATION=$2

HOUR=$((DURATION/3600))

HOURINSEC=$((HOUR*3600))

DURATION=$((DURATION-HOURINSEC))

MINUTE=$(((DURATION/60)))

SECOND=$((DURATION%60))

write_msg "$1 finished took $HOUR hours, $MINUTE minutes, $SECOND seconds"

}

if [ "$1" = "job-end" ]

then

START=$(date +%s)

write_msg "Starting rclone sync"

CMD="rclone sync --progress --stats-one-line --stats=30s --config /root/.config/rclone/rclone.conf /Storage/VMBACKUPS/ ProtonDrive:/Homelab/PVE"

write_msg "using command: $CMD"

$CMD

END=$(date +%s)

duration "rclone" $((END-START))

fi

exit 0

/Storage/VMBACKUPS/ is the directory of my backups on PVE, ProtonDrive is the name of my remote on rclone, and /Homelab/PVE is the directory where I want to save my backups to the cloud.
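The hook is called several times during a job, with phases such as job-start, backup-start, backup-end, job-end and job-abort, so the script has to decide which ones it cares about. As a sketch of that decision in isolation (the function name and the returned keywords are my own invention):

```shell
# Map a vzdump hook phase to the action this sketch would take:
# sync only after a completed job, never after an aborted one.
phase_action() {
    case "$1" in
        job-end)   echo "sync" ;;
        job-abort) echo "skip" ;;
        *)         echo "ignore" ;;
    esac
}
```

At the top of a hook script this could be used as `[ "$(phase_action "$1")" = "sync" ] || exit 0`, so that every other phase returns immediately without touching the remote.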

I saved the script as rclone_backup.sh in /root and made it executable:

chmod +x rclone_backup.sh

To start it after the backups are complete, edit the /etc/vzdump.conf file, uncomment the script entry and insert the path:

script:/root/rclone_backup.sh
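To double-check the configuration without waiting for the next scheduled backup, the entry can be read back and the hook run by hand. A small sketch, with a helper name of my own choosing:

```shell
# Print the hook script path from the first active "script:" entry
# of a vzdump.conf-style file. Commented-out entries are ignored.
# $1: config file, defaults to /etc/vzdump.conf.
configured_hook() {
    sed -n 's/^script:[[:space:]]*//p' "${1:-/etc/vzdump.conf}" | head -n 1
}
```

For example, `hook=$(configured_hook); [ -x "$hook" ] && "$hook" job-end` runs the configured script as if a backup job had just finished. Keep in mind that this starts a real sync to the remote.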

Conclusions

The Futro S920 is an interesting thin client for an unpretentious homelab server. The real bottleneck, however, is the 8GB of RAM, which can be tight with PVE and ZFS.

For now it meets my needs. In the future it would be interesting to buy another one to build a cluster or, who knows, maybe two more so I can experiment with an HA cluster.